An Object-Dependent Hand Pose Prior from Sparse Training Data

Henning Hamer, Juergen Gall, Thibaut Weise, and Luc Van Gool

Abstract

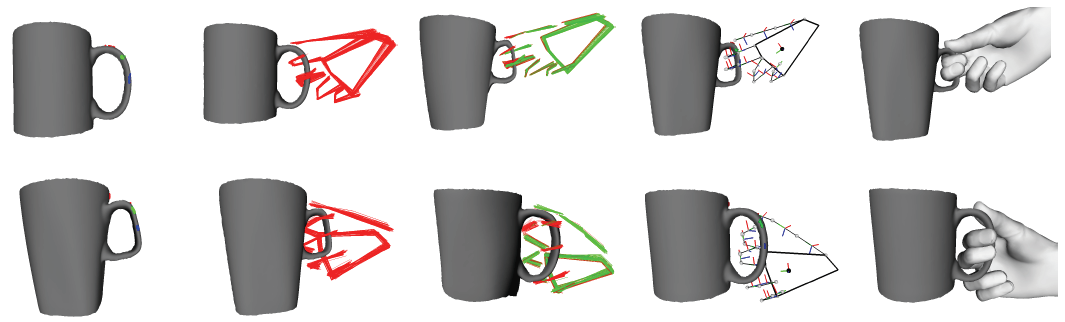

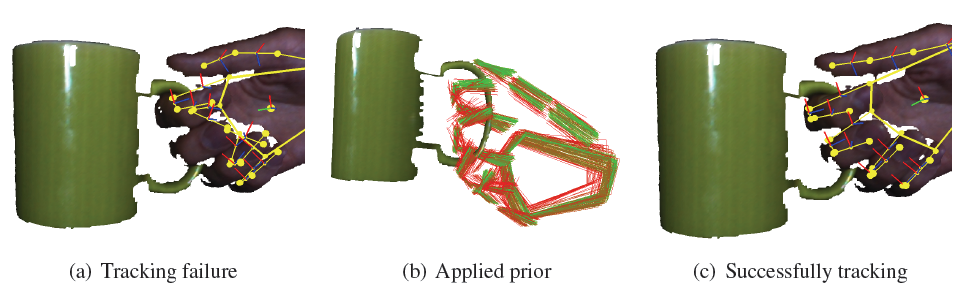

In this paper, we propose a prior for hand pose estimation that integrates the direct relation between a manipulating hand and a 3D object. This is of particular interest for a variety of applications, since many tasks performed by humans require hand-object interaction. Inspired by the human ability to learn how to handle an object from a single example, we focus on very sparse training data. We express estimated hand poses in local object coordinates and extract, for each individual hand segment, its relative position and orientation as well as its contact points on the object. The prior is then modeled as a spatial distribution conditioned on the object. Given a new object of the same object class and new hand dimensions, we can transfer the prior by a procedure involving a geometric warp. In our experiments, we demonstrate that the prior can be used to improve the robustness of a 3D hand tracker and to synthesize a new hand grasping a new object. To this end, we integrate the prior into a unified belief propagation framework for tracking and synthesis.
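Expressing an estimated hand pose in local object coordinates, the first step described above, amounts to a rigid change of reference frame applied to each hand segment. The following is a minimal sketch of that generic transform, not the authors' implementation. It assumes that both the object pose and each segment pose are given in world coordinates as a 3x3 rotation matrix plus a translation vector, with the object pose mapping object coordinates to world coordinates. The function names `to_object_frame` and `rot_z` are hypothetical helpers introduced only for illustration.

```python
import math
from typing import List, Tuple

Mat3 = List[List[float]]
Vec3 = List[float]


def transpose(m: Mat3) -> Mat3:
    """Transpose of a 3x3 matrix (the inverse, for a rotation matrix)."""
    return [[m[j][i] for j in range(3)] for i in range(3)]


def matmul(a: Mat3, b: Mat3) -> Mat3:
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def matvec(m: Mat3, v: Vec3) -> Vec3:
    """Product of a 3x3 matrix and a 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def to_object_frame(R_obj: Mat3, t_obj: Vec3,
                    R_seg: Mat3, t_seg: Vec3) -> Tuple[Mat3, Vec3]:
    """Hypothetical helper: re-express a world-frame segment pose in the object frame.

    Assumes the object pose maps object coordinates to world coordinates,
    x_world = R_obj @ x_obj + t_obj, so the inverse mapping is
    x_obj = R_obj^T @ (x_world - t_obj).
    """
    R_inv = transpose(R_obj)
    R_local = matmul(R_inv, R_seg)                 # segment orientation relative to object
    offset = [t_seg[i] - t_obj[i] for i in range(3)]
    t_local = matvec(R_inv, offset)                # segment position relative to object
    return R_local, t_local


def rot_z(theta: float) -> Mat3:
    """Rotation by theta radians about the z-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    # Object rotated 90 degrees about z and placed at (1, 0, 0);
    # a hand segment with identity orientation at (1, 1, 0).
    R_local, t_local = to_object_frame(rot_z(math.pi / 2), [1.0, 0.0, 0.0],
                                       identity, [1.0, 1.0, 0.0])
    print(t_local)
```

In this example the segment lies one unit along the object's own x-axis, so `t_local` is approximately (1, 0, 0) up to floating-point rounding, and `R_local` equals a rotation of -90 degrees about z. Once all segments are expressed this way, relative positions, orientations, and contact points no longer depend on where the object sits in the scene, which is what allows a prior to be conditioned on the object.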

Images/Videos

Contact, Prior, Warped Prior, Synthesized Grasp, and Artificial Hand

Video (AVI, ~20 MB)

Data

Hand-Object Interaction (HOI) Dataset

If you have questions concerning the dataset, please contact Juergen Gall.

Publications

Hamer H., Gall J., Weise T., and Van Gool L., An Object-Dependent Hand Pose Prior from Sparse Training Data (PDF), IEEE Conference on Computer Vision and Pattern Recognition (CVPR'10), 2010. ©IEEE