Discovering Object Classes from Activities

Abhilash Srikantha and Juergen Gall

Abstract

In order to avoid an expensive manual labelling process or to learn object classes autonomously without human intervention, object discovery techniques have been proposed that extract visually similar objects from weakly labelled videos. However, the problem of discovering small- or medium-sized objects is largely unexplored. We observe that videos with activities involving human-object interactions can serve as weakly labelled data for such cases. Since neither object appearance nor motion is distinct enough to discover objects in such videos, we propose a framework that samples from a space of algorithms and their parameters to extract sequences of object proposals. Furthermore, we model similarity of objects based on appearance and functionality, which is derived from human and object motion. We show that functionality is an important cue for discovering objects from activities and demonstrate the generality of the model on three challenging RGB-D and RGB datasets.

Images

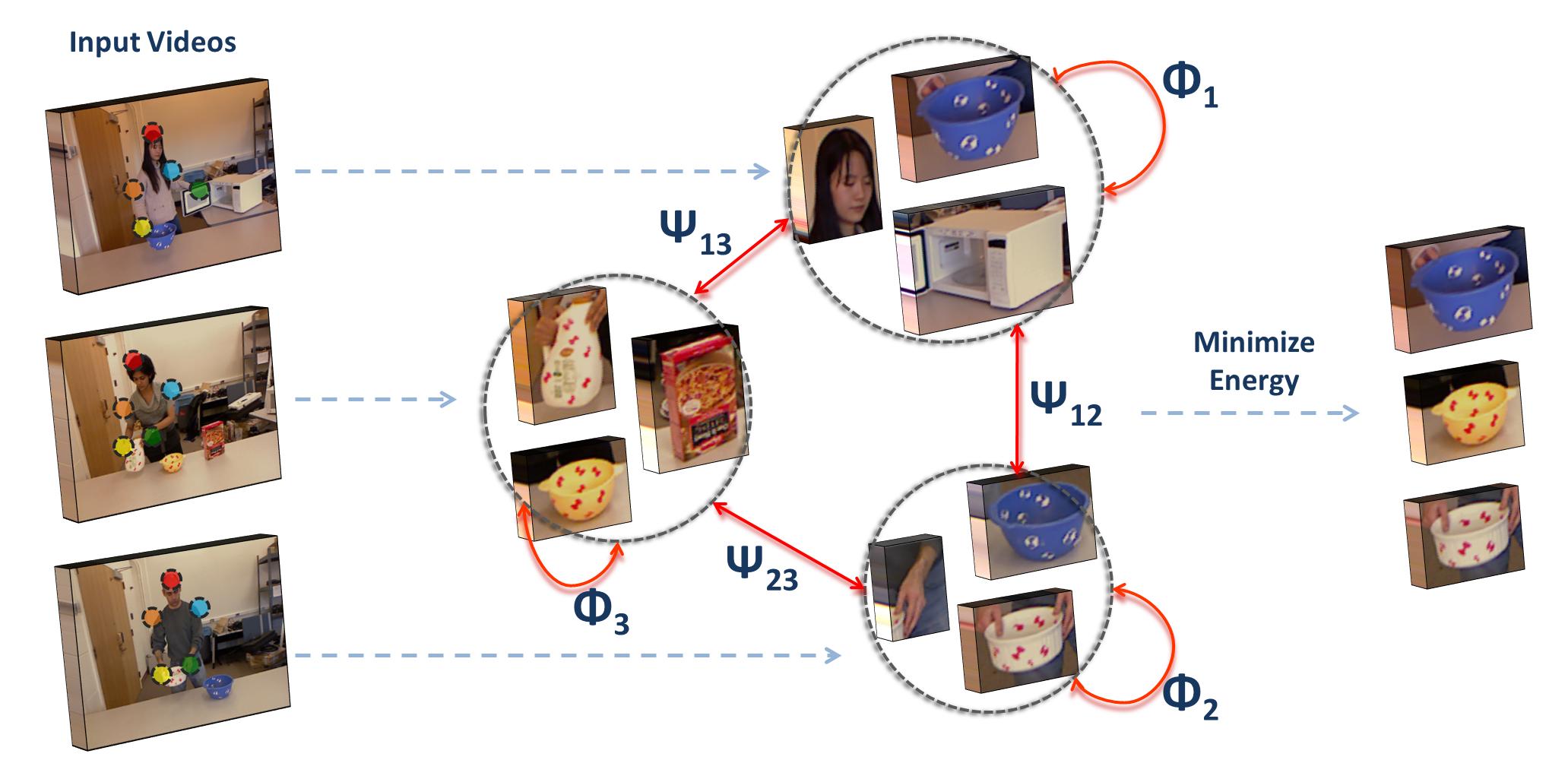

Processing pipeline: Input is a set of action videos with human pose. Multiple sequences of object proposals (tubes) are generated from each video. By defining a model that encodes the similarity between tubes in terms of appearance and object functionality, instances of the common object class are discovered.
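The discovery step in the caption can be illustrated with a toy sketch. It is a simplified illustration under stated assumptions, not the authors' model. Each tube is assumed to be summarized by two hypothetical feature vectors: an appearance descriptor and a functionality descriptor, which stands in for cues derived from human and object motion. Pairs of tubes are scored by a weighted sum of cosine similarities with an assumed weight `w_app`, and one tube per video is selected so that the summed pairwise similarity is maximal. The names `Tube`, `tube_similarity`, `discover` and `make_tube` are invented for this example, and the three toy videos with two tubes each use made-up numbers.

```python
import itertools
from dataclasses import dataclass

import numpy as np


@dataclass
class Tube:
    """Hypothetical object-proposal tube summarized by two feature vectors."""
    appearance: np.ndarray     # assumed appearance descriptor of the tracked region
    functionality: np.ndarray  # assumed descriptor of object motion relative to the human


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    # Cosine similarity; the small epsilon guards against zero-length vectors.
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))


def tube_similarity(s: Tube, t: Tube, w_app: float = 0.5) -> float:
    # Weighted combination of appearance and functionality similarity
    # (w_app is an assumed weight, not a value from the paper).
    return w_app * cosine(s.appearance, t.appearance) + (1.0 - w_app) * cosine(
        s.functionality, t.functionality
    )


def discover(tubes_per_video: list[list[Tube]], w_app: float = 0.5):
    # Exhaustively try every way of picking one tube per video and keep the
    # selection whose summed pairwise similarity is largest.
    best_choice, best_score = None, -np.inf
    for choice in itertools.product(*(range(len(tubes)) for tubes in tubes_per_video)):
        selected = [tubes[i] for tubes, i in zip(tubes_per_video, choice)]
        score = sum(
            tube_similarity(a, b, w_app) for a, b in itertools.combinations(selected, 2)
        )
        if score > best_score:
            best_choice, best_score = choice, score
    return best_choice, best_score


def make_tube(app, fun) -> Tube:
    # Helper that builds a Tube from plain lists.
    return Tube(np.array(app, dtype=float), np.array(fun, dtype=float))


# Three toy videos with two candidate tubes each (invented numbers).
videos = [
    [make_tube([1.0, 0.0], [1.0, 0.0]), make_tube([0.0, 1.0], [0.0, 1.0])],
    [make_tube([0.0, 1.0], [1.0, 1.0]), make_tube([0.9, 0.1], [1.0, 0.1])],
    [make_tube([1.0, 0.1], [0.9, 0.0]), make_tube([0.2, 1.0], [0.0, 1.0])],
]
choice, score = discover(videos)
print(choice)  # -> (0, 1, 0)
```

Exhaustive enumeration grows with the product of the number of tubes per video, so it only works for tiny examples like this one. A realistic number of videos and proposals would call for approximate inference or a greedy search instead.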

Data

If you have questions concerning the data, please contact Abhilash Srikantha.

Publications

Srikantha A. and Gall J., Weak Supervision for Detecting Object Classes from Activities, Computer Vision and Image Understanding, Special Issue on Image and Video Understanding in Big Data, Elsevier, To appear.

Srikantha A. and Gall J., Discovering Object Classes from Activities, European Conference on Computer Vision (ECCV'14), Springer, LNCS 8694, 415-430, 2014. ©Springer-Verlag